Transformation, Part 2

Now that we understand constraints a little bit more, let's carry on and buff this table out completely. We'll add a timestamp with time zone so we know when the import happened and then we'll get into some weird stuff with generated columns!

Timestamping when data has been created or updated is a regular occurrence in the developer world and if you're working with Postgres, the definition is simple:

create table products(

--...

created_at timestamptz not null default now(),

updated_at timestamptz not null default now()

);

When preparing data for analysis, it's common to denormalize it in order to speed up the analysis process. It's common to see data pre-calculated and stored, especially if its historical data like ours is. I'm doing exactly this by creating a date and year field.

Postgres makes this kind of thing simple by offering generated fields:

create table inms(

id serial primary key,

created_at timestamp not null,

date date not null generated always as (created_at::date) stored,

year int not null generated always as (date_part('year', created_at)) stored,

--...

);

Whenever the created_at data changes, so will date and year because these are virtual columns that can't be changed directly.

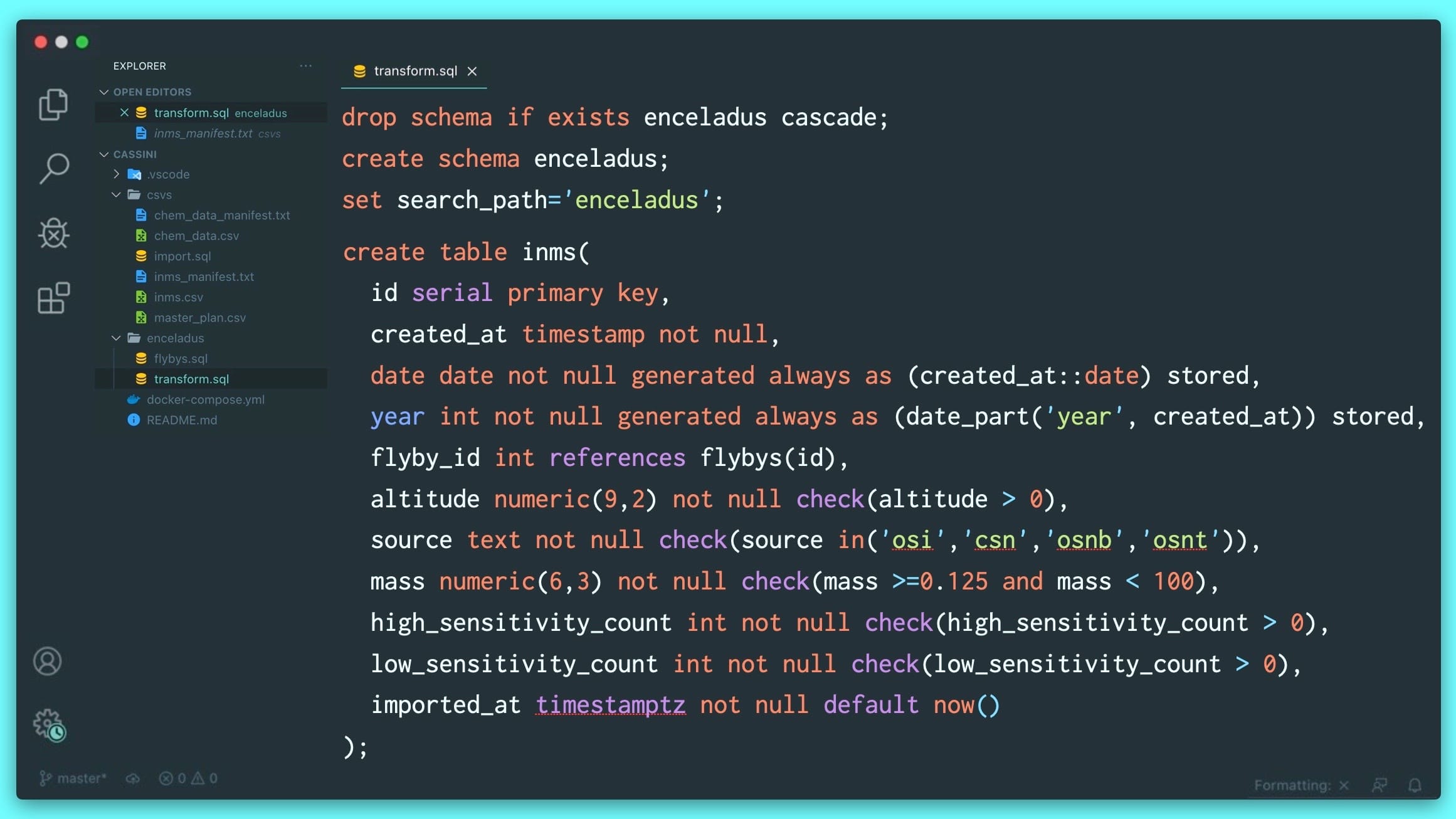

Here's the entire table definition:

drop schema if exists enceladus cascade;

create schema enceladus;

set search_path='enceladus';

create table inms(

id serial primary key,

created_at timestamp not null,

date date not null generated always as (created_at::date) stored,

year int not null generated always as (date_part('year', created_at)) stored,

flyby_id int references flybys(id),

altitude numeric(9,2) not null check(altitude > 0),

source text not null check(source in('osi','csn','osnb','osnt')),

mass numeric(6,3) not null check(mass >=0.125 and mass < 100),

high_sensitivity_count int not null check(high_sensitivity_count > 0),

low_sensitivity_count int not null check(low_sensitivity_count > 0),

imported_at timestamptz not null default now()

);

To insert data into this table we need to execute an insert statement (which is going to fail, but that's OK it's kind of in the plan). Notice that I don't need to insert all of the fields - the generated bits take care of themselves, as does the imported_at timestamp due to its default:

insert into inms(

created_at,

altitude,

source,

mass,

high_sensitivity_count,

low_sensitivity_count

)

select

sclk::timestamp,

alt_t::numeric(9,2),

source,

mass_per_charge::numeric(6,3),

c1counts::int,

c1counts::int

from csvs.inms

where target='ENCELADUS';